Project Description

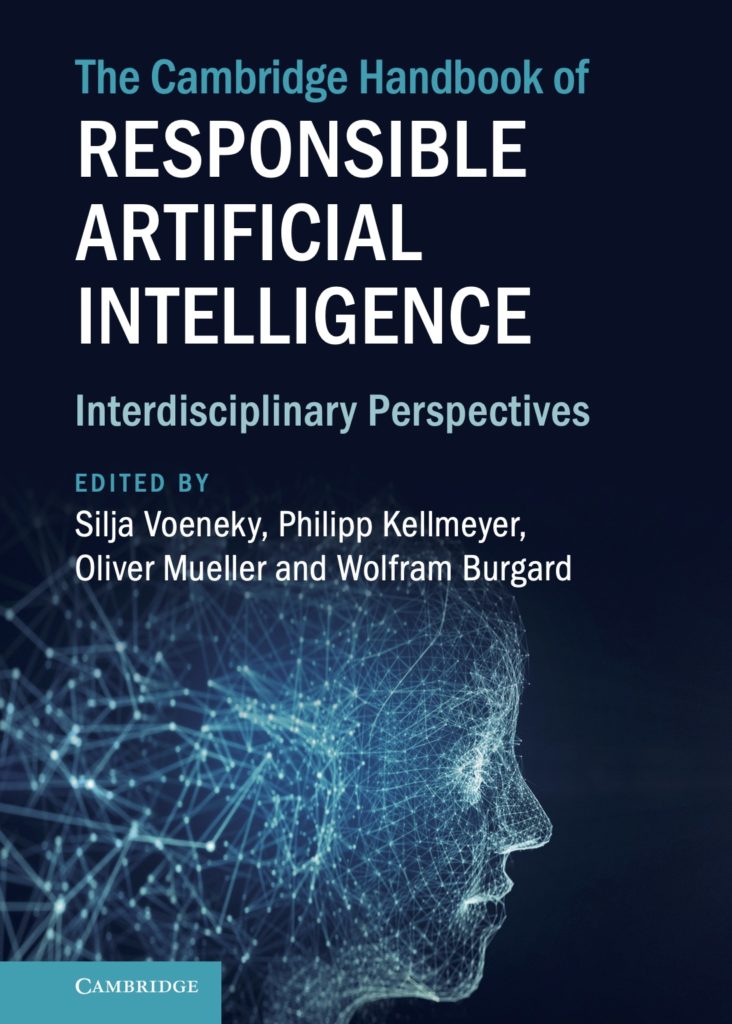

The Saltus Group has edited The Cambridge Handbook of Responsible Artificial Intelligence – Interdisciplinary Perspectives, which will be published in September 2022. It comprises 28 chapters written by participants of the virtual conference “Global Perspectives on Responsible AI” (2020, Freiburg Institute for Advanced Studies). It will be available here.

The book will provide conceptual, technical, ethical, social, and legal perspectives on “Responsible AI” and discuss pressing governance challenges for AI and AI systems for the next decade from a global and transdisciplinary perspective. The exchange with distinguished scholars from different continents (Asia, Australia, USA, and Europe) and from different disciplines (AI, computer science, medicine, neurosciences, philosophy, and law) at the June 2020 virtual conference provided an excellent basis for finding new interdisciplinary answers and perspectives on questions of responsible AI governance and regulation.

The book will be structured in the following eight sections:

- Foundations of Responsible AI

- Current and Future Approaches to AI Governance

- Responsible AI Liability Schemes

- Fairness and Non-Discrimination in AI Systems

- Responsible Data Governance

- Responsible Corporate Governance of AI Systems

- Responsible AI Healthcare and Neurotechnology Governance

- Responsible AI for Security Applications and in Armed Conflict

You can find videos of selected presentations of the virtual conference here.

Interdisciplinary Perspectives

Contributors from different disciplines explore the foundational and normative aspects of Responsible AI and provide a basis for a transdisciplinary approach to Responsible AI.

A look at the contents of the book:

Part I – Foundations of Responsible AI

The moral proxy problem

Johanna Thoma describes the ‘moral proxy problem,’ which arises when an autonomous artificial agent makes a decision as a proxy for a human agent.

The relationship of AI and humans

Christopher Durt argues that rather than being a tool, artificial subject, or simulation of human intelligence, AI is defined by its interrelational character.

Artificial morality and machine ethics

Catrin Misselhorn provides a basis for what Responsible AI means by laying down conceptual foundations of artificial morality and discussing related ethical issues.

AI delegation and supervision

Jaan Tallinn and Richard Ngo propose an answer to the question of what Responsible AI means.

Overview of key technologies

Wolfram Burgard provides an overview of the technological state of the art from the perspective of robotics and computer science.

Part II – Current and Future Approaches to AI Governance

China’s AI Regulation

Weixing Shen and Yun Liu focus on China’s AI laws, in particular, the Chinese Data Security Law, the Chinese Civil Code, the E-Commerce Law, and the Personal Information Protection Law.

Towards a global AI Charter

Thomas Metzinger lists the main problem domains related to AI systems from a philosophical angle.

Intellectual debt

Jonathan Zittrain argues that society’s movement from basic science towards applied technology that bypasses rigorous investigative research inches us closer to a world in which we are reliant on an oracle AI.

Regulating high-risk AI products and services

Thorsten Schmidt and Silja Voeneky propose a new adaptive regulation scheme for AI-driven high-risk products and services as part of a future Responsible AI governance regime.

The new EU Regulation on AI

Thomas Burri examines how general ethical norms on AI diffuse into domestic law without engaging international law.

AI and democracy

Mathias Risse reflects on the medium and long-term prospects and challenges democracy faces from AI.

Part III – Responsible AI Liability Schemes

A critical evaluation of the European approach to AI

Jan von Hein analyses and evaluates the European Parliament’s proposal on a civil liability regime for AI against the background of the already existing European regulatory framework on private international law.

Liability for AI

Christiane Wendehorst analyses the different potential risks posed by AI as part of two main categories, safety risks and fundamental rights risks, and considers why AI challenges existing liability regimes.

Part IV – Fairness and Non-Discrimination

The legality of discriminatory AI

Antje von Ungern-Sternberg focuses on the legality of discriminatory AI, which is increasingly used to assess people (profiling).

Statistical discrimination by means of computational profiling

Wilfried Hinsch argues that because AI systems do not rely on human stereotypes or rather limited data, computational profiling may be a better safeguard of fairness than humans.

Part V – Responsible Data Governance

AI as a challenge for data protection

Boris Paal identifies a conflict between two objectives pursued by data protection law: the comprehensive protection of privacy and personal rights, and the facilitation of an effective and competitive data economy.

Data governance and trust

Sangchul Park, Yong Lim, and Haksoo Ko analyse how South Korea has been dealing with the COVID-19 pandemic and its legal consequences. The chapter provides an overview of the legal framework and the technology that enabled the country's technology-based contact tracing scheme.

AI and the right to data protection

Ralf Poscher sets out to show how AI challenges the traditional understanding of the right to data protection and presents an outline of an alternative conception that better deals with emerging AI technologies.

Part VI – Responsible Corporate Governance of AI Systems

AI and anti-trust

Stefan Thomas shows how enforcement paradigms that hinge on descriptions of the inner sphere and conduct of human beings may collapse when applied to the effects precipitated by independent AI-based computer agents.

AI in financial services

After outlining different areas of AI application and different regulatory regimes relevant to robofinance, Matthias Paul analyses the risks emerging from AI applications in the financial industry.

AI as a challenge for corporations and their executives

Jan Lieder argues that whilst there is the potential to enhance the current system, there are also risks of destabilisation.

Part VII – Responsible AI in Healthcare and Neurotechnology

The emergence of cyberbilities

Boris Essmann and Oliver Müller address AI-supported neurotechnology, especially Brain-Computer Interfaces (BCIs).

Neurorights

Philipp Kellmeyer sets out a human-rights based approach for governing AI-based neurotechnologies.

Key elements of medical AI

The legal scholars Fruzsina Molnár-Gábor and Johanne Giesecke begin by setting out the key elements of medical AI.

Legal challenges for AI in healthcare

Christoph Krönke focuses on the legal challenges healthcare AI Alter Egos face, especially in the EU.

Part VIII – Responsible AI for Security Applications and in Armed Conflict

Morally repugnant weaponry

Alex Leveringhaus spells out ethical concerns regarding autonomous weapons systems (AWS) by asking whether lethal autonomous weapons are morally repugnant and whether this entails that they should be prohibited by international law.

Responsible AI in war

Dustin Lewis discusses the use of Responsible AI during armed conflict. The scope of this chapter is not limited to lethal autonomous weapons but also encompasses other AI-related tools and techniques related to warfighting, detention, and humanitarian services.

AI, law, and national security

Ebrahim Afsah outlines different implications of AI for the area of national security. He argues that while AI overlaps with many challenges to national security arising from cyberspace, it also creates new risks.

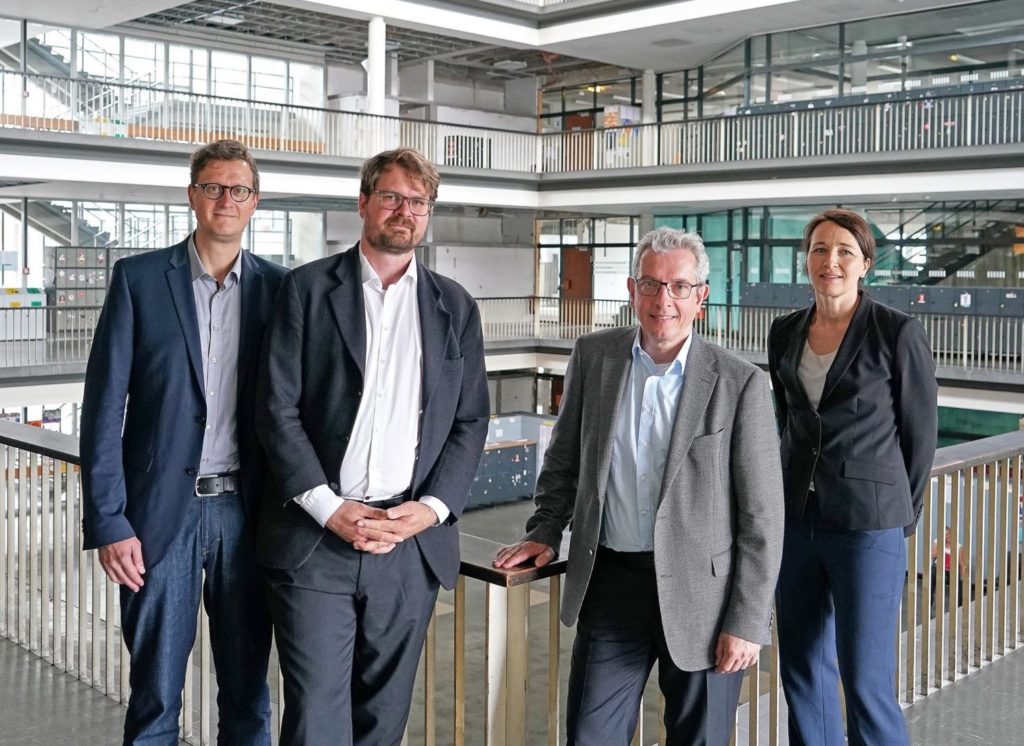

Editors

Prof. Dr. Silja Voeneky

Professor of Public International Law and Comparative Law at the University of Freiburg and Senior Fellow at the 2018–2021 FRIAS Saltus Research Group Responsible AI

Dr. Philipp Kellmeyer

Neurologist and neuroscientist at the University Medical Center, University of Freiburg, and Senior Fellow at the 2018–2021 FRIAS Saltus Research Group Responsible AI

Prof. Dr. Oliver Müller

Professor of Philosophy with a focus on technology and on contemporary philosophy, University of Freiburg, and Senior Fellow at the 2018–2021 FRIAS Saltus Research Group Responsible AI

Prof. Dr. Wolfram Burgard

Professor of Computer Science at the University of Nuremberg and Senior Fellow at the 2018–2021 FRIAS Saltus Research Group Responsible AI

Team

Alisa Pojtinger – Research Assistant

Daniel Feuerstack – Research Assistant

Jonatan Klaedtke – Research Assistant

Acknowledgements

The Cambridge Handbook of Responsible Artificial Intelligence

Interdisciplinary Perspectives

2022 – Open Access!
